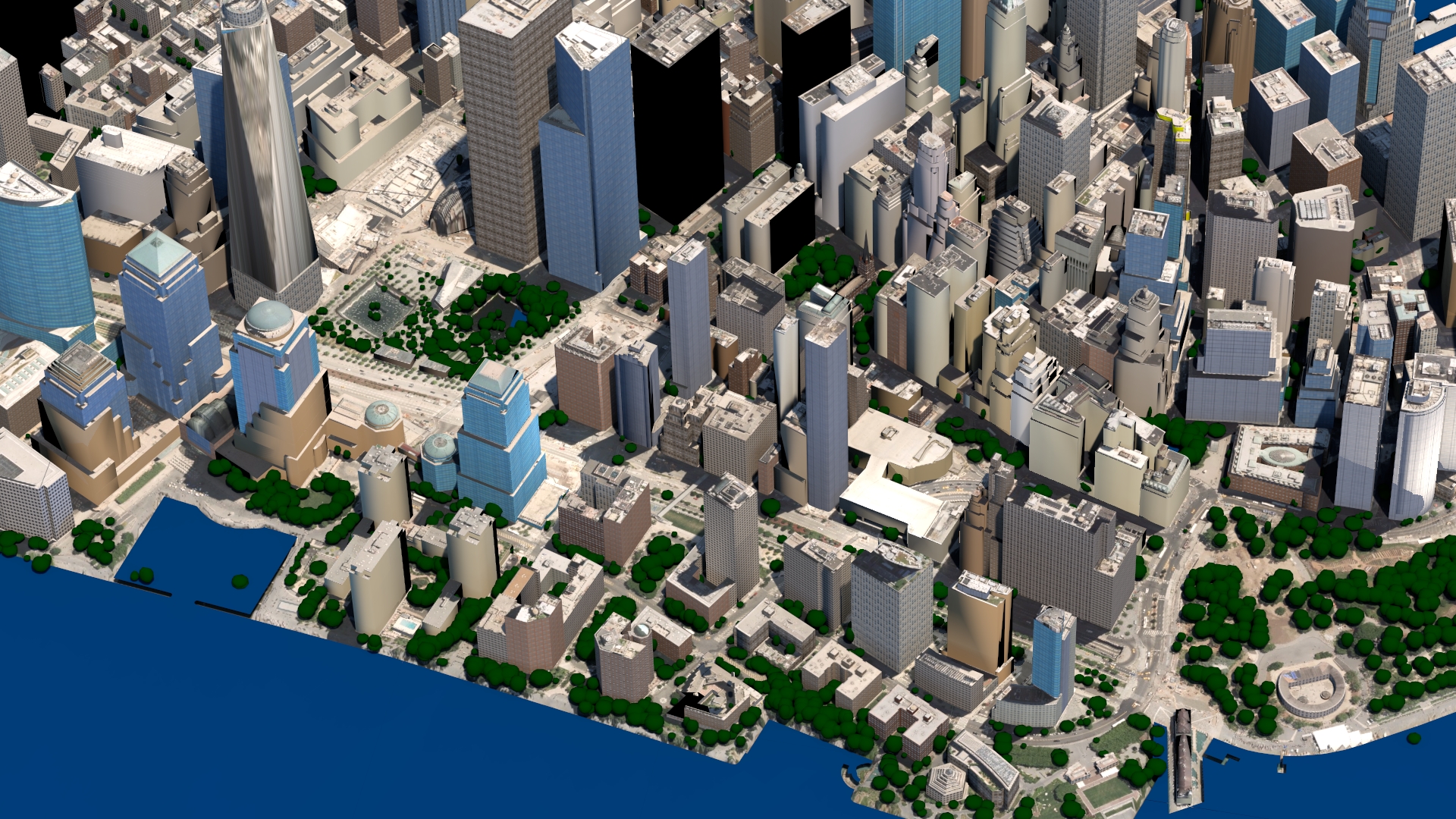

Geopipe wants to make it possible for anyone to interact with, modify, explore, and be immersed in the real world, in virtual space.

How I Started

Geopipe automatically reconstructs semantically rich virtual 3D models of the real world for simulations, gaming, and architecture. We teach computers to understand raw sensor data and deduce the properties of the objects that make up real environments.

How I Built This

We’ve been interested in this problem for about five years, ever since our first personal projects trying to put the real world into video games. We founded Geopipe nearly two years ago to explore creating virtual models of the real world for architecture, games, simulations, and beyond. We’ve been fortunate to be supported by the NYC Media Lab (itself supported by the EDC), NYU’s Leslie Entrepreneurs’ Lab, Techstars NYC, and a grant from the National Science Foundation.

Over this time, we’ve been building a complex software system that combines new and existing techniques to ingest data, analyze and understand the world, and turn it into semantically rich data and 3D models. We use various Open Data assets to train our system to recognize objects like buildings, trees, roads, sidewalks, and much more, both in NYC and in other cities.

We’ve also been designing a business around providing the data and models to our customers. For example, architects and developers can more easily show communities what a new building or master plan will look like in the context of the world around it. Companies designing self-driving cars can safely test their vehicles in a virtual copy of a real city. Gamers can explore and play in their city, in virtual space.